The future is now, people!

While it might seem like crazy inventions are a long way off, we’re already living in the future!

And we’re about to get some inside info.

What sounds futuristic but is happening now?

AskReddit users shared their thoughts.

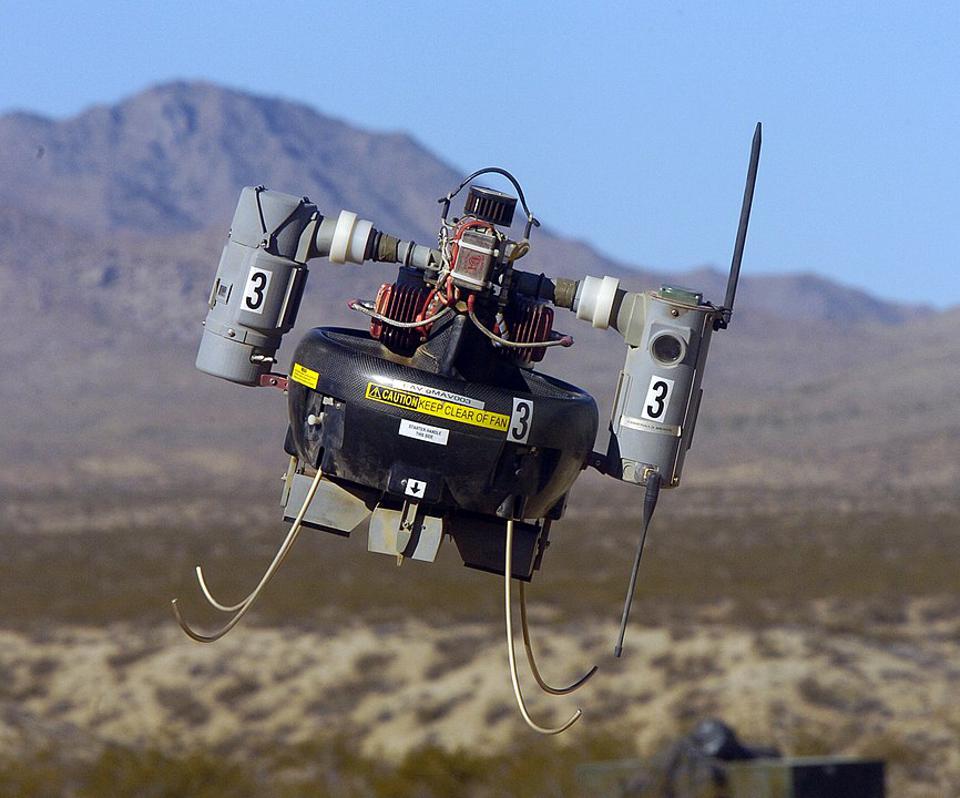

1. Robots.

“It’s quite phenomenal how much certain industries have developed their robotics.

You just don’t see it in your everyday life, but places like Amazon and pretty much all mechanical-related businesses are developing robotics at an insane rate.”

2. Didn’t see it coming.

“I still feel a tiny bit blindsided by lab-grown meat suddenly being commercially viable. Would not have predicted that one.

Those drone light shows, too. What year was it when radio-controlled drones just kind of showed up and became commonplace?

I think it was around 2015 when they really ‘took off.’”

3. It is a big deal!

“Private companies launch rockets into space like it’s no big deal. You can literally walk outside one night and think, ‘What tf is that???’

And someone will tell you, ‘Oh, that’s just the latest SpaceX launch.’ And you go about your business.”

4. There you go.

“My 2-year-old daughter walks around the house following and talking to a robot while it vacuums.”

5. Pretty insane.

“I have a device that fits into my pocket.

I can get virtually every bit of information produced by the human race if I know what buttons to push.

It’s also voice-activated, so I can just talk to it and figure out when my flight leaves or where the nearest fresh tomatoes are being sold.”

6. Seeing double.

“Scientists have already managed to clone a living thing.

And it happened around 25 years ago.”

7. Open Sesame!

“Automatic doors.

I remember watching the first six Star Wars films many years ago (I’m 18 now) and playing the Lego games based on them, and I remember thinking to myself how cool the sliding doors were.

I know they’ve been around for a long time, but it only recently hit me that we have automatic doors just like in Star Wars.”

8. Scary.

“In China, they are using AI to identify Uyghur Muslims from the rest of the population.

It detects ‘classic Uyghur features’ based on complexion and facial features.

It’s the world’s first instance of ‘automated racial profiling.’”

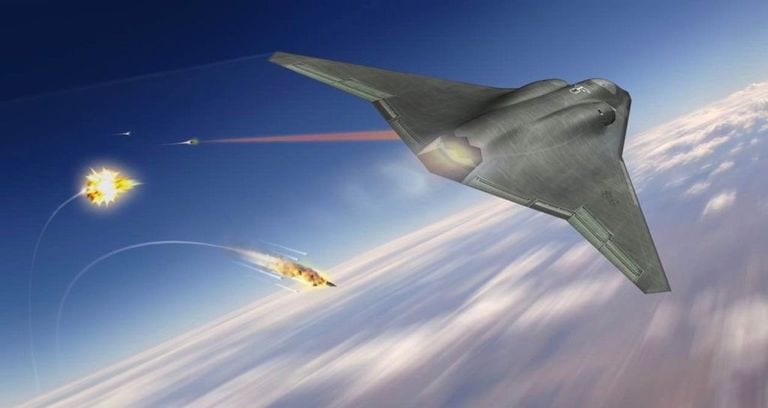

9. Weapons of war.

“Saw the recent news of the Israeli-Hamas conflict, including footage of Iron Dome being used.

I remember seeing the prototypes of that system in a ‘future weapons’ documentary on TV years ago, but was never really sure if or when it entered active service.

So yeah, we have rockets that shoot down other rockets now and might have for years already.”

10. Antimatter.

“The fact that humans are capturing and containing antimatter for study is amazing.

The fact that we can store it longer than a year at a time is extraordinary.”

11. That’s crazy.

“Facebook is integrating Oculus Rift support.

I’m not sure what’s available to the public, but in-house, they are having 3D meetings like in Star Wars. They can project and manipulate a screen in 3D like the 3D map in the first Rey movie. It doesn’t wrap around you yet; it hovers in front of you, but it will.

My friend works for FB in the AI department. The first software I tried out was a demo for medical education. I saw a life-size human, and I could use gestures to look at his different body systems, right in front of me, like on the Holodeck.

You turn the system on by holding up your palm like a wizard and an interactive sphere appears on your hand.”

12. Gene editing.

“CRISPR gene editing. People’s genes can literally be edited.

Basically, an enzyme called Cas9 (a nuclease) gets delivered into people’s cells somehow (I don’t know how, maybe an injection?), and Cas9 cuts the DNA at the target site, allowing a sequence to be taken out and replaced with something else, which can then be transcribed into RNA, translated into a protein, and used in the body.

It’s mostly being used right now for gene therapy (for conditions like sickle cell anemia) and in agriculture, but it’s crazy to think about what it could be used for in the near future. It’s kind of controversial because people don’t like the unnaturalness of it (like people’s dislike of GMOs), and I can see their point. I just think it’s very intriguing and revolutionary, and I’ll be interested to see what happens with it in the future.”

Now it’s your turn to sound off.

In the comments, tell us about things you know about that sound futuristic but are happening now.

Thanks in advance!

The post People Talk About Things That Sound Futuristic but Are Happening Right Now appeared first on UberFacts.