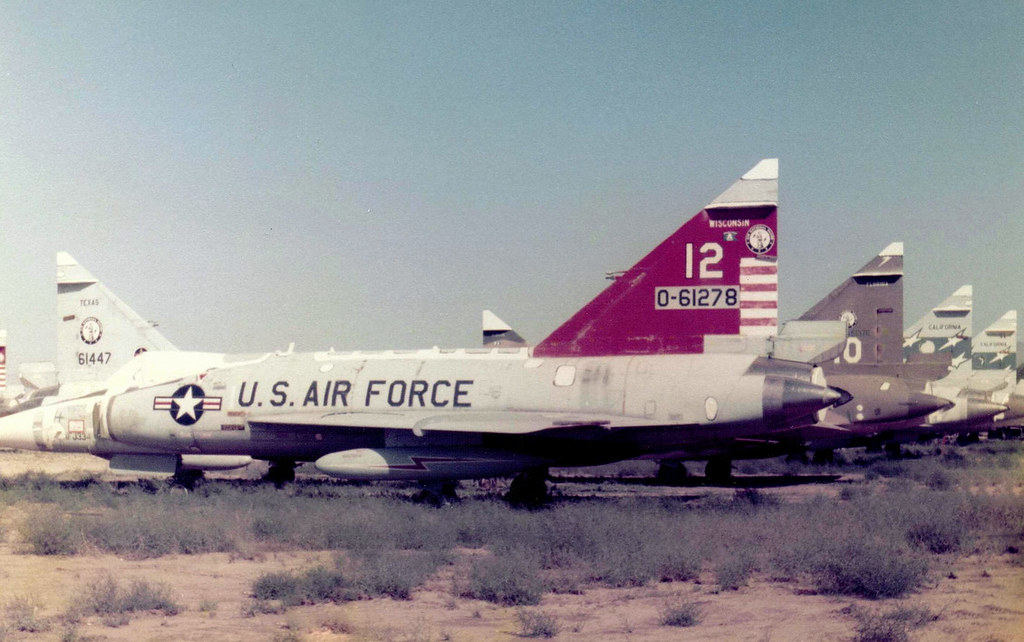

Perhaps you got a toy drone in a White Elephant exchange at work. Or a neighborhood kid crashed one in your backyard. Maybe you’ve used one yourself to take wedding or real estate photos. It feels like something out of Asimov or Jules Verne, but it’s 2021 and the hot topic in the military is what to do about drones.

Specifically: swarms of them.

In a recent article for Forbes, David Hambling, the author of Swarm Troopers: How Small Drones Will Conquer the World, lays out this military conundrum for the lay reader.

Image credit: Indian Army via Forbes

The problem is both simple and complex.

Simply: The more outnumbered humans become in battle, the harder it will be for them to react to threats. In the future, humans won’t be able to keep up.

We get tired. We can be distracted. We have consciences, most of the time.

But just how much autonomy do we want to hand over to machines? We all know about The Terminator and I, Robot, right?

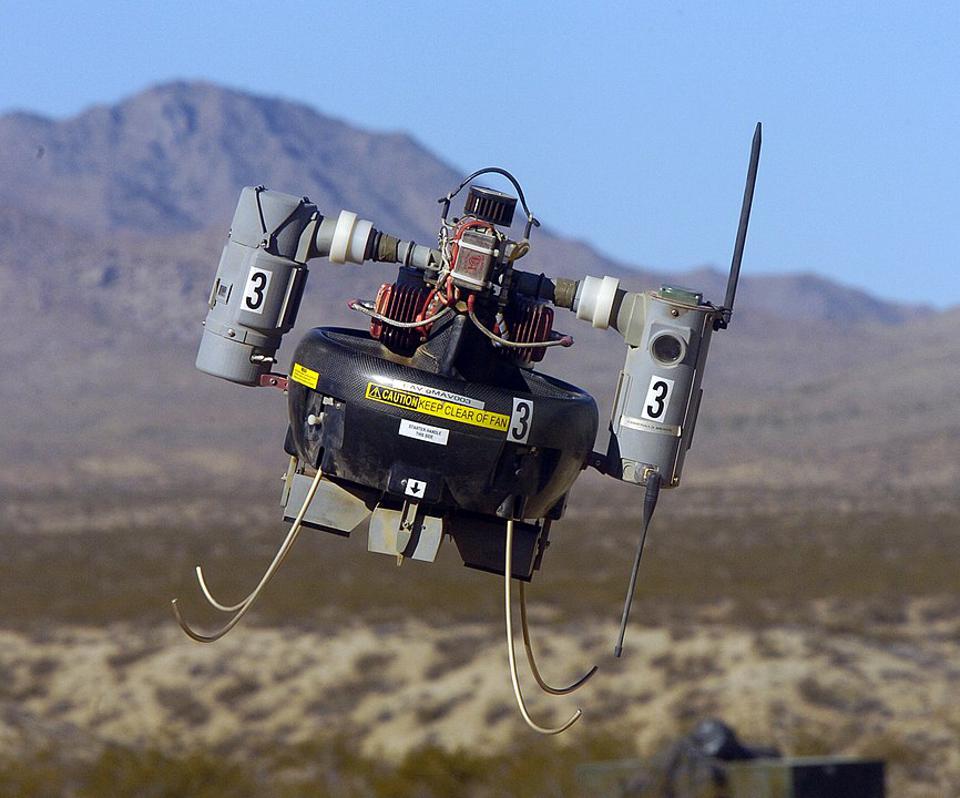

Image credit: U.S. Navy via Forbes

That is exactly the question military leaders around the world are grappling with.

General John Murray, who leads the U.S. Army Futures Command, believes it’s time to turn over some, but not all, of the control.

[The current rules] require meaningful human control over any lethal system, though that may be in a supervisory role rather than direct control – termed ‘human-on-the-loop’ rather than ‘human-in-the-loop’ … Pentagon leaders need to lead a discussion on how much human control of AI is needed to be safe but still effective, especially in the context of countering new threats such as drone swarms.

Such a move, to allow humans to supervise but not fully control drones, would require the government to change current rules around their use and deployment.

But General Murray thinks this is going to be necessary because:

Faced with large numbers of incoming threats, many of which may be decoys, human gunners are likely to be overtaxed.

Unsurprisingly, computers are just faster and better at this.

It seems our humanity gets in the way. Research shows:

Human operators kept wanting to interfere with the robots’ actions. Attempts to micromanage the machines degraded their performance.

Then there’s the matter of brain versus microchip processing speeds.

“If you have to transmit an image of the target, let the human look at it, and wait for the human to hit the ‘fire’ button, that is an eternity at machine speed,” said one scientist, speaking on condition of anonymity. “If we slow the AI to human speed … we’re going to lose.”

The threat that governments are most concerned about, massive drone swarms, is already very real.

Military swarms of a few hundred drones have already been demonstrated; in future we are likely to see swarms of thousands, or more. One U.S. Navy project envisages having to counter up to a million drones at once.

China is known to have a drone swarm launcher ready to go, and other nations may not be far behind.

Drone swarms present a clear and present danger, a new kind of weapon of mass destruction.

Analysts can use swarming algorithms modeled on the flocking patterns of birds and insects to anticipate attacks and design countermeasures.

And billions are being spent on new technology to combat the drones, including an anti-aircraft vehicle called IM-SHORAD, but without machines to control our defenses, it may not be enough.

At this point, we have two options: we can try to pass a treaty along the lines of the Nuclear Non-Proliferation Treaty, which limits the spread of nuclear weapons, or we can try to fight like with like.

The European Parliament supports an outright ban.

“The decision to select a target and take lethal action using an autonomous weapon system must always be made by a human exercising meaningful control and judgement, in line with the principles of proportionality and necessity.”

The other line of thought is that these machines could actually be more ethical in the long term, due to their lower margin of error.

So outlawing them altogether may not be the right answer.

Unlike nuclear weapons, which many countries stockpile knowing that using them would invite retaliation, AI is a bit of a gray area. It can be built, programmed, and owned without being destructive by its very nature, which makes it harder to control.

If AI-controlled weapons can defeat those operated by humans, then whoever has the AIs will win, and failing to deploy them means accepting defeat.

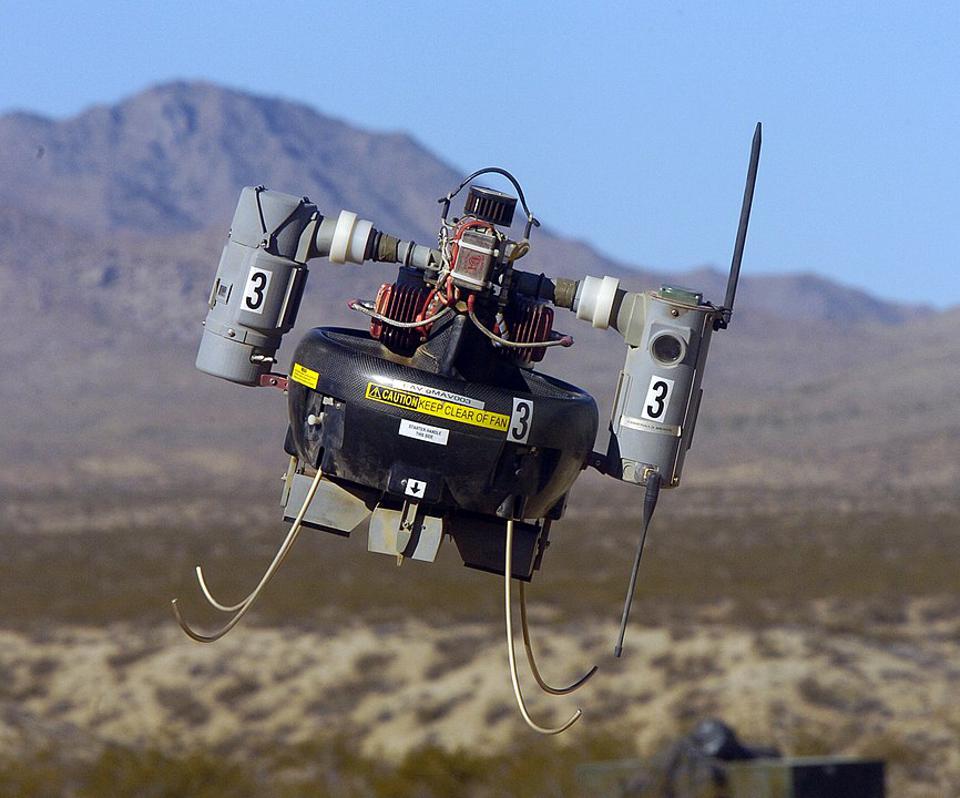

Image credit: YouTube via Forbes

The argument over what to do has dragged on for years, but the technology is here now, and there is still no clear answer.

At this rate, large-scale AI-powered swarm weapons may be used in action before the debate is concluded. The big question is which nations will have them first.

The whole debate reminds me of the brilliant 1985 sci-fi novel Ender’s Game by Orson Scott Card (which sadly was not done justice by the 2013 film of the same name).

The time to make a decision and prepare for these types of attacks is now. And it sounds like unless we’re going to train up a super-genius army of video-game-playing children to counter other countries’ drone attacks, we might need to let out the leash a bit on the machines.

The post A General Suggests We Should Let Drones Do More Work the Military appeared first on UberFacts.